AI is rewriting how software gets built

AI coding agents are changing software development at an unprecedented pace. Engineers can ship faster than ever, and the amount of AI-generated code landing in production is exploding.

But there is a growing mismatch.

AI makes writing code cheap. Maintaining and evolving that code at scale is becoming harder, not easier.

Writing new code is only the beginning. Real systems require continuous upgrades, migrations, refactors, security fixes, and long-term consistency. Those changes demand deep context, reliable automation, and coordination across teams and repositories.

AI coding agents excel at local tasks. Enterprise maintenance is a very different problem.

As velocity increases, companies are already feeling the gap. Faster code generation is creating a growing backlog of repetitive, high-risk maintenance work that slows teams down and compounds technical debt.

Why generic coding agents hit a ceiling in large-scale maintenance

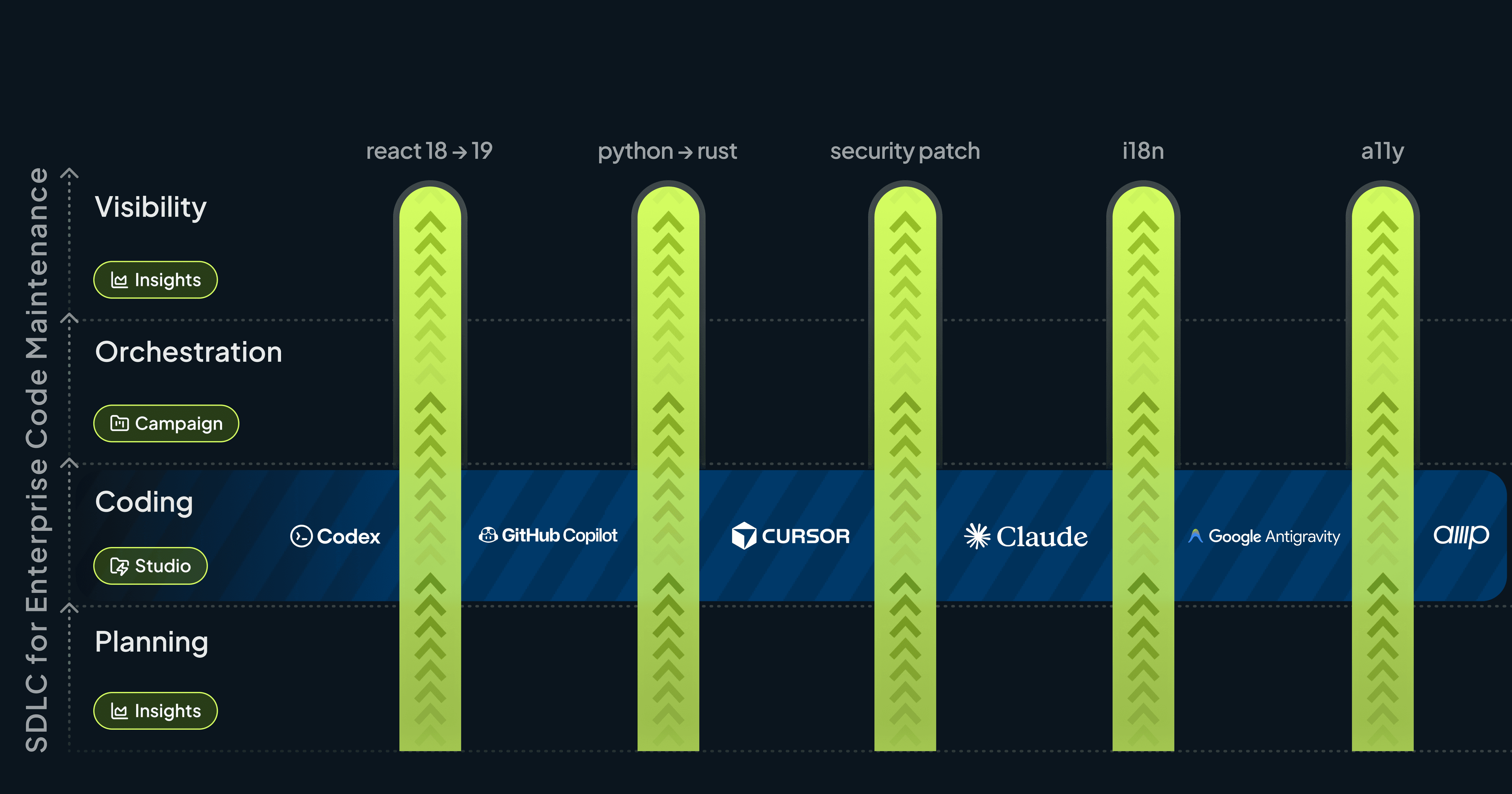

The ecosystem of coding agents has grown rapidly. Tools like Copilot, Cursor, Claude Code, and others are now part of everyday developer workflows.

Despite different interfaces and models, they share a common design philosophy:

- Help a single developer solve the task in front of them

- Stay general enough to work on any codebase

- Optimize for local productivity

This model works extremely well for writing features, fixing bugs, and iterating in an IDE or terminal. It is exactly what individual developers want.

But enterprise maintenance has different requirements.

When teams try to use generic agents for large-scale migrations or cross-repo changes, the same problems show up repeatedly:

- incomplete context across repositories

- inconsistent transformations between teams

- drift over time as patterns diverge

- slow coordination and review cycles

- high risk when the blast radius is large

These tools were never designed to own work that spans dozens of repos, hundreds of engineers, or months of execution.

Enterprise code maintenance needs something purpose-built.

Micro agents purpose-built for enterprise codebases

Codemod is designed around a different set of principles, optimized specifically for repetitive, risky, and large-scale code changes.

Instead of trying to help with everything, Codemod focuses on doing a few things exceptionally well:

- Owning the job end to end, from planning to execution to tracking

- Specializing in concrete tasks like React upgrades, internationalization, security remediations, or design system migrations

- Serving platform teams, tech leads, and organizations responsible for system-wide change

A Codemod micro agent might upgrade React across 120 repositories using the same rules everywhere. Another might apply a consistent i18n transformation across millions of lines of code, with progress tracked and reviewed over time.

Codemod does not replace general coding agents. It complements them by taking ownership of the work they are not built to handle reliably.

When a task is repetitive, spans many repos, or carries high risk, Codemod provides the foundation that makes automation safe and repeatable.

Because Codemod is built for enterprises, it also includes the controls leaders expect by default. Dry runs, approval gates, audit logs, rollbacks, and integrations with GitHub and Jira are part of the platform.
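To make the idea of a dry run followed by an approval gate concrete, here is a minimal sketch in Python. It is not Codemod's API: the `dry_run` and `apply_if_approved` functions, the in-memory `repos` mapping, and the `legacy_http` to `modern_http` rename are all hypothetical, chosen only to show a plan being produced without touching any code and then applied only where it was approved.

```python
import difflib

def dry_run(repos, transform):
    """Return {repo: {path: unified diff}} without modifying `repos`."""
    plan = {}
    for repo, files in repos.items():
        for path, before in files.items():
            after = transform(before)
            if after == before:
                continue  # nothing to report for untouched files
            diff = "".join(difflib.unified_diff(
                before.splitlines(keepends=True),
                after.splitlines(keepends=True),
                fromfile=f"a/{path}",
                tofile=f"b/{path}",
            ))
            plan.setdefault(repo, {})[path] = diff
    return plan

def apply_if_approved(repos, transform, approved):
    """Build a new mapping, transforming only repos listed in `approved`."""
    return {
        repo: {
            path: (transform(src) if repo in approved else src)
            for path, src in files.items()
        }
        for repo, files in repos.items()
    }

# Hypothetical stand-in for checked-out repositories.
repos = {
    "web": {"app.py": "import legacy_http\n"},
    "api": {"main.py": "import requests\n"},
}

def upgrade(src):
    # Hypothetical rule: swap one module name for another.
    return src.replace("legacy_http", "modern_http")

plan = dry_run(repos, upgrade)
for repo, files in plan.items():
    for path, diff in files.items():
        print(f"[{repo}] {path}\n{diff}")
```

A reviewer would read the printed plan, then pass the set of approved repository names to `apply_if_approved`. A real system would also record who approved what and keep enough state to roll back, which this sketch leaves out.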


Codemod’s superpowers make it a natural complement to coding agents

A growing registry of proven micro agents

Codemod captures migration knowledge as reusable micro agents.

Some are generated by Codemod AI from natural language descriptions. Others come from real-world migrations performed across enterprises and open source ecosystems. Every micro agent is reviewed, tested, versioned, and validated before it runs in production.

This turns one-off migration work into durable assets. Teams can reuse proven agents, adapt them to their codebase, or generate new ones with confidence that they will behave consistently across thousands of files.

Compiler-aware infrastructure with real semantic understanding

Codemod operates on a deep semantic model of the codebase, not just text diffs.

This unlocks capabilities that generic agents struggle to deliver at scale:

- automated planning with full repository visibility

- safe and consistent transformations grounded in semantics

- orchestration across repositories with dependency awareness

- progress tracking and insights over time
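The difference between a text-level edit and a syntax-aware one is easy to see in a small example. The sketch below uses only Python's standard `ast` and `re` modules and is not how Codemod itself is implemented; the `old_fetch` and `new_fetch` names and both helper functions are hypothetical. It renames a function call two ways: a regex that rewrites every textual match, and a tree transform that rewrites only real call sites.

```python
import ast
import re

SOURCE = '''
def handler(x):
    print("call old_fetch here")
    return old_fetch(x)
'''

class RenameCall(ast.NodeTransformer):
    """Rename calls to a bare function name; leave everything else alone."""

    def __init__(self, old, new):
        self.old = old
        self.new = new

    def visit_Call(self, node):
        self.generic_visit(node)  # handle nested calls first
        if isinstance(node.func, ast.Name) and node.func.id == self.old:
            renamed = ast.Name(id=self.new, ctx=ast.Load())
            node.func = ast.copy_location(renamed, node.func)
        return node

def rename_textual(source, old, new):
    """Text-level rename: rewrites every whole-word match, strings included."""
    return re.sub(rf"\b{re.escape(old)}\b", new, source)

def rename_semantic(source, old, new):
    """Syntax-level rename: rewrites only call expressions."""
    tree = RenameCall(old, new).visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)  # requires Python 3.9+

textual = rename_textual(SOURCE, "old_fetch", "new_fetch")
semantic = rename_semantic(SOURCE, "old_fetch", "new_fetch")
print(textual)
print(semantic)
```

The regex version also rewrites the message inside the string literal, while the tree version changes only the call. Note that `ast.unparse` discards comments and original formatting, so tools meant for production code typically work on a concrete syntax tree that preserves them, and resolve names through imports and types rather than matching on a bare identifier.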

This infrastructure is already proven in production. Teams use Codemod to upgrade frameworks, internationalize large codebases, standardize systems after acquisitions, and migrate apps from one language to another, all with predictable outcomes.

Turning maintenance into background automation

As AI agents generate more code, enterprises need a system that keeps their software coherent, secure, and modern over time.

Codemod becomes that automation layer.

It reads intent, gathers context across repositories, applies safe transformations, opens pull requests, coordinates execution across teams, and tracks progress from start to finish.

General coding agents create.

Codemod micro agents maintain.

Horizontal tools like Cursor create enormous leverage and, inevitably, some chaos. Vertical platforms like Codemod add orchestration, guardrails, and organization-wide consistency, turning that chaos into durable enterprise value.

Together, they allow organizations to move fast without sacrificing the long-term health of their systems.